Developing and deploying vision AI applications is complex and expensive. Organizations need data scientists and machine learning engineers to build training and inference pipelines based on unstructured data such as images and videos. Given the acute shortage of skilled machine learning engineers, building and integrating intelligent vision AI applications has become costly for enterprises.

On the other hand, companies such as Google, Intel, Meta, Microsoft, NVIDIA, and OpenAI are making pre-trained models available to customers. Pre-trained models for face detection, emotion detection, pose detection, and vehicle detection are openly available to developers who want to build intelligent vision-based applications. Many organizations have already invested in CCTV, surveillance, and IP cameras for security. Though these cameras can be connected to existing pre-trained models, the plumbing needed to connect the dots is far too complex.

Building vision AI inference pipelines

Building a vision AI inference pipeline to derive insights from existing cameras and pre-trained models or custom models involves processing, encoding, and normalizing the video streams aligned with the target model. Once that’s in place, the inference outcome must be captured along with the metadata to deliver insights through visual dashboards and analytics.
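To make the "processing and normalizing" step concrete, here is a minimal sketch in plain Python. It assumes a grayscale frame represented as rows of 0-255 integers and a hypothetical model that expects a fixed input size with values scaled to the 0.0-1.0 range. The function names are illustrative and are not part of any Google API; a real pipeline would typically do this with an image or video library.

```python
from typing import List

# Assumption: a grayscale frame is a list of rows of 0-255 pixel values.
Frame = List[List[int]]


def resize_nearest(frame: Frame, out_h: int, out_w: int) -> Frame:
    """Resize a frame to out_h x out_w using nearest-neighbor sampling."""
    in_h, in_w = len(frame), len(frame[0])
    return [
        [frame[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def normalize(frame: Frame) -> List[List[float]]:
    """Scale 0-255 pixel values into the 0.0-1.0 range."""
    return [[px / 255.0 for px in row] for row in frame]


def preprocess(frame: Frame, out_h: int, out_w: int) -> List[List[float]]:
    """Resize, then normalize, so the frame matches the model's input."""
    return normalize(resize_nearest(frame, out_h, out_w))
```

Each target model has its own expected resolution and value range, which is why this step has to be "aligned with the target model" rather than done once for all models.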

For platform vendors, the vision AI inference pipeline presents an opportunity to build tools and development environments to connect the dots across the video sources, models, and analytics engine. If the development environment delivers a no-code/low-code approach, it further accelerates and simplifies the process.

Figure 1. Building a vision AI inference pipeline with Vertex AI Vision.

About Vertex AI Vision

Google’s Vertex AI Vision lets organizations seamlessly integrate computer vision AI into applications without the plumbing and heavy lifting. It’s an integrated environment that combines video sources, machine learning models, and data warehouses to deliver insights and rich analytics. Customers can either use pre-trained models available within the environment or bring custom models trained in the Vertex AI platform.

Figure 2. It is possible to use pre-trained models or custom models trained in the Vertex AI platform.

A Vertex AI Vision application starts with a blank canvas, which is used to build a vision AI inference pipeline by dragging and dropping components from a visual palette.

Figure 3. Building a pipeline with drag-and-drop components.

The palette contains various connectors that include the camera/video streams, a collection of pre-trained models, specialized models targeting specific industry verticals, custom models built using AutoML or Vertex AI, and data stores in the form of BigQuery and AI Vision Warehouse.

According to Google Cloud, Vertex AI Vision has the following services:

- Vertex AI Vision Streams: An endpoint service for ingesting video streams and images across a geographically distributed network. Connect any camera or device from anywhere and let Google handle scaling and ingestion.

- Vertex AI Vision Applications: Developers can build extensive, auto-scaled media processing and analytics pipelines using this serverless orchestration platform.

- Vertex AI Vision Models: Prebuilt vision models for common analytics tasks, including occupancy counting, PPE detection, face blurring, and retail product recognition. Users can also build and deploy their own models trained within the Vertex AI platform.

- Vertex AI Vision Warehouse: An integrated serverless rich-media storage system that combines Google search and managed video storage. Petabytes of video data can be ingested, stored, and searched within the warehouse.

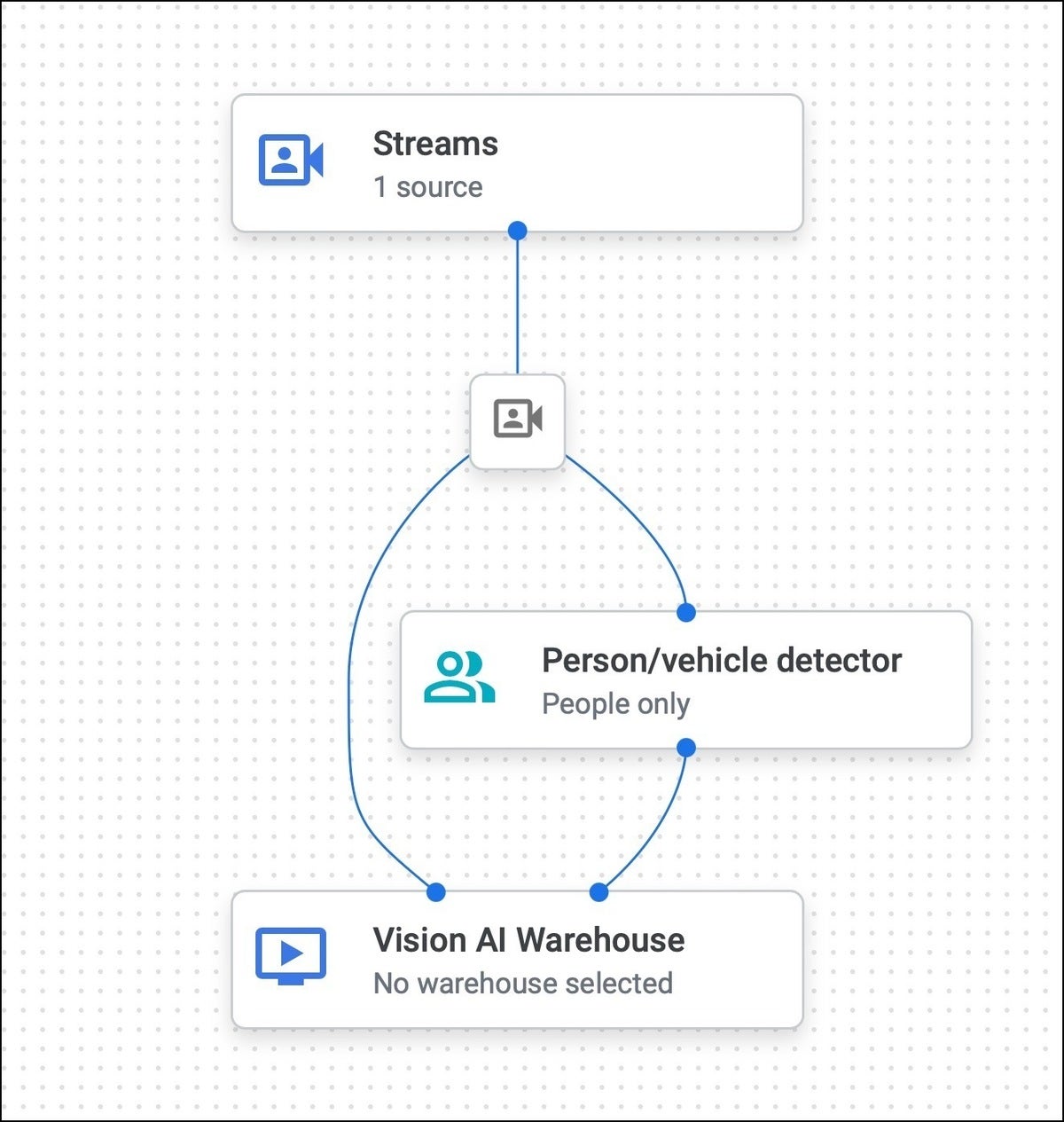

For example, the pipeline below ingests the video from a single source, forwards it to the person/vehicle counter, and stores the input and output (inference) metadata in AI Vision Warehouse for running simple queries. The warehouse can be replaced with BigQuery to integrate with existing applications or perform complex SQL-based queries.

Figure 4. A sample pipeline built with Vertex AI Vision.

Deploying a Vertex AI Vision pipeline

Once the pipeline is built visually, it can be deployed to start performing inference. The green tick marks in the screenshot below indicate a successful deployment.

Figure 5. Green tick marks indicate that the pipeline was deployed.

The next step is to start ingesting the video feed to trigger the inference. Google provides a command-line tool called vaictl to grab the video stream from a source and pass it to the Vertex AI Vision endpoint. It supports both static video files and RTSP streams based on H.264 encoding.
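As a rough illustration of the ingestion step, the sketch below assembles a vaictl command line for either a local video file or an RTSP source. The subcommands and flags shown (`-p`, `-l`, `-c`, `--service-endpoint`, `send video-file to streams`, `--file-path`, `--loop`, `send rtsp to streams`, `--rtsp-uri`) and the default cluster name are recalled from Google's published examples and should be treated as assumptions; check them against the current vaictl documentation before use. The helper function itself is hypothetical.

```python
from typing import List


def build_vaictl_command(
    project_id: str,
    location: str,
    stream_id: str,
    source: str,
    cluster: str = "application-cluster-0",  # assumed default cluster name
    loop: bool = False,
) -> List[str]:
    """Build an argument list for vaictl (flags are assumptions, see above)."""
    base = [
        "vaictl",
        "-p", project_id,
        "-l", location,
        "-c", cluster,
        "--service-endpoint", "visionai.googleapis.com",
    ]
    if source.startswith("rtsp://"):
        # Live camera feed delivered over RTSP.
        return base + ["send", "rtsp", "to", "streams", stream_id,
                       "--rtsp-uri", source]
    # Otherwise treat the source as a static video file on disk.
    cmd = base + ["send", "video-file", "to", "streams", stream_id,
                  "--file-path", source]
    if loop:
        cmd.append("--loop")  # replay the file to simulate a continuous feed
    return cmd
```

The returned list could be passed to `subprocess.run` on a machine where vaictl is installed and authenticated; the sketch only builds the arguments and does not run anything.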

Once the pipeline is triggered, both the input and output streams can be monitored from the console, as shown.

Figure 6. Monitoring input and output streams from the console.

Since the inference output is stored in the AI Vision Warehouse, it can be queried based on a search criterion. For example, the next screenshot shows frames containing at least five people or vehicles.
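The logic of such a search can be sketched in a few lines of Python. The record shape below (a timestamp plus person and vehicle counts per frame) is hypothetical and is not the warehouse's actual schema, and the sketch assumes "at least five people or vehicles" means that either count reaches the threshold.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class FrameResult:
    """Hypothetical per-frame inference metadata."""
    timestamp: float      # seconds from the start of the stream
    person_count: int
    vehicle_count: int


def frames_with_at_least(
    results: Iterable[FrameResult], threshold: int
) -> List[FrameResult]:
    """Keep frames where the person or vehicle count reaches the threshold."""
    return [
        r for r in results
        if r.person_count >= threshold or r.vehicle_count >= threshold
    ]
```

For instance, `frames_with_at_least(results, 5)` would return only the frames that a query like the one in the screenshot is meant to surface.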

Figure 7. A sample query for inference output.

Google provides an SDK to programmatically talk to the warehouse. BigQuery developers can use existing libraries to run advanced queries based on ANSI SQL.
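To give a feel for the kind of SQL analysis this enables, the sketch below runs an aggregate query over inference metadata. It uses Python's built-in sqlite3 module as a local stand-in rather than BigQuery, and the `detections` table and its columns are hypothetical; the actual schema written by a Vertex AI Vision pipeline may differ.

```python
import sqlite3
from typing import List, Tuple

Row = Tuple[str, int, int]  # (minute, person_count, vehicle_count)


def busiest_minutes(rows: List[Row], min_total: int) -> List[Tuple[str, int]]:
    """Return minutes whose combined detections reach min_total, busiest first."""
    conn = sqlite3.connect(":memory:")  # local stand-in, not BigQuery
    conn.execute(
        "CREATE TABLE detections ("
        "ts_minute TEXT, person_count INTEGER, vehicle_count INTEGER)"
    )
    conn.executemany("INSERT INTO detections VALUES (?, ?, ?)", rows)
    query = """
        SELECT ts_minute, SUM(person_count + vehicle_count) AS total
        FROM detections
        GROUP BY ts_minute
        HAVING SUM(person_count + vehicle_count) >= ?
        ORDER BY total DESC, ts_minute
    """
    result = conn.execute(query, (min_total,)).fetchall()
    conn.close()
    return result
```

The same GROUP BY and HAVING pattern is standard SQL, so a comparable query could be adapted for BigQuery once the real table and column names are known.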

Integrations and support for Vertex AI Vision at the edge

Vertex AI Vision has tight integration with Vertex AI, Google’s managed machine learning PaaS. Customers can train models either through AutoML or custom training. To add custom processing of the output, Google integrated Cloud Functions, which can manipulate the output to add annotations or additional metadata.
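The kind of post-processing such a function might perform can be sketched as a plain Python function. The input and output shapes, the field names (`person_count`, `site`, `crowded`), and the function itself are hypothetical; this is not the Cloud Functions handler signature or the payload format that Vertex AI Vision actually sends.

```python
from typing import Any, Dict


def annotate_output(
    prediction: Dict[str, Any], site: str, crowd_threshold: int = 5
) -> Dict[str, Any]:
    """Return a copy of the model output with extra metadata attached."""
    annotated = dict(prediction)           # leave the original untouched
    count = prediction.get("person_count", 0)
    annotated["site"] = site               # where the camera is installed
    annotated["crowded"] = count >= crowd_threshold
    return annotated
```

Keeping the enrichment in a small pure function like this makes it easy to test locally before wiring it into a deployed function.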

The true potential of the Vertex AI Vision platform lies in its no-code approach and the ability to integrate with other Google Cloud services such as BigQuery, Cloud Functions, and Vertex AI.

While Vertex AI Vision is an excellent step towards simplifying vision AI, more support is needed to deploy applications at the edge. Industry verticals such as healthcare, insurance, and automotive prefer to run vision AI pipelines at the edge to avoid latency and meet compliance. Adding support for the edge will become a key driver for Vertex AI Vision.

Copyright © 2022 IDG Communications, Inc.
